Research Bio

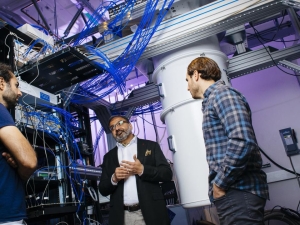

Hany Farid's research focuses on digital forensics, forensic science, misinformation, and human perception. We are living in an exciting digital age in which nearly every aspect of our lives is being affected by breakthroughs in technology. At the same time, these breakthroughs have given rise to complex ethical, legal, and technological questions. Many of these issues arise from the inherent malleability of digital media, which makes it so easy to alter, and from the speed and ease with which material can be distributed online. Farid's lab has pioneered a new field of study, termed digital forensics, whose goal is the development of computational and mathematical techniques for authenticating digital media.

Farid received his undergraduate degree in Computer Science and Applied Mathematics from the University of Rochester in 1989 and his Ph.D. in Computer Science from the University of Pennsylvania in 1997. Following a two-year postdoctoral fellowship in Brain and Cognitive Sciences at MIT, he joined the faculty at Dartmouth College in 1999, where he remained until 2019. He is the recipient of an Alfred P. Sloan Fellowship and a John Simon Guggenheim Fellowship, and is a Fellow of the National Academy of Inventors.

Research Expertise and Interest

digital forensics, forensic science, misinformation, human perception

In the News

11 Things UC Berkeley AI Experts Are Watching for in 2026

Hany Farid and I School Ph.D. Students Featured on PBS Nova

New Research Combats Burgeoning Threat of Deepfake Audio

UC Noyce Initiative Advances Digital Innovation

Disinformation Is Breaking Democracy. Berkeley Is Exploring Solutions.

Hany Farid: To Limit Disinformation, We Must Regulate Internet Platforms

Disinformation Nation: What Can We Do To Crack the Code?

As Online Harms Surge, Our Better Web Initiative Advances at UC Berkeley

Taking Aim at Disinformation and Misinformation, Deans and Center Heads Reach Out Across Campus at UC Berkeley To Launch “Our Better Web”

Fighting back against coronavirus misinformation

Berkeley links up with Facebook, but wants to see tech giant’s accountability

Featured in the Media

LA's wildfires prompted a rash of fake images. NPR spoke to Hany Farid about the fakes and why they are so dangerous. “Fake images of an ongoing catastrophe can put people at risk, making evacuees unsure of where to reach safety. They can also undermine faith in bedrock institutions, from governments to news outlets,” Farid said.

Hany Farid, a professor at the School of Information and a leading expert on image and video manipulation, says that detecting deepfakes will take more than AI alone.

In hopes of stopping deepfake-related misinformation from circulating, Hany Farid, a professor and image-forensics expert, is building software that can spot political deepfakes and perhaps also authenticate genuine videos that have been called out as fakes.

In a study published in Science Advances, Julia Dressel, who conducted the research for her Dartmouth College undergraduate thesis with computer science professor Hany Farid, found that small groups of randomly chosen people could predict whether a criminal defendant would be convicted of a future crime with about 67 percent accuracy, a rate virtually identical to that of Compas, software some American judges use to inform their decisions.