Bringing Clarity to Computational Imaging

New tool removes motion artifacts when imaging dynamic samples

Computational imaging of microscopic samples typically requires capturing multiple sequential measurements, then using algorithms to reconstruct a single high-resolution image. This process works well when the sample is static, but if it is moving, as is common with live biological specimens, the final image may be blurry or distorted.

Now, Berkeley researchers have developed a method to improve temporal resolution for these dynamic samples. In a study published in Nature Methods, they demonstrated a new computational imaging tool, dubbed the neural space-time model (NSTM), that uses a small, lightweight neural network to reduce motion artifacts and solve for the motion trajectories.

“The challenge with imaging dynamic samples is that the reconstruction algorithm assumes a static scene,” said lead author Ruiming Cao, a Ph.D. student in bioengineering. “NSTM extends these computational methods to dynamic scenes by modeling and reconstructing the motion at each timepoint. This reduces the artifacts caused by motion dynamics and allows us to see those super-fast-paced changes within a sample.”

According to the researchers, NSTM can be integrated with existing systems without the need for additional, expensive hardware. And it’s highly effective. “NSTM has been shown to provide roughly an order of magnitude improvement on the temporal resolution,” said Cao.

The open-source tool also enables the reconstruction process to operate on a finer time scale. For example, a computational imaging reconstruction may involve capturing about 10 or 20 raw images to produce a single super-resolved image. Using neural networks, NSTM models how the object changes across those 10 or 20 images, so scientists can reconstruct a super-resolved image on the time scale of a single raw image rather than once every 10 or 20 images.

“Basically, we’re using a neural network to model the dynamics of the sample in time, so that we can reconstruct at these faster time scales,” said Laura Waller, principal investigator of the study and professor of electrical engineering and computer sciences. “This is super powerful because you could improve your time scales by a factor of 10 or more, depending on how many images you were using initially.”
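The arithmetic behind that factor can be sketched in a few lines. This is only an illustration: the 100 frames-per-second camera rate and the function names are hypothetical, not figures from the study.

```python
def reconstruction_rate_hz(camera_fps, frames_per_reconstruction):
    """How many reconstructed images per second a pipeline can deliver."""
    return camera_fps / frames_per_reconstruction


def temporal_gain(frames_per_reconstruction):
    """Gain from reconstructing at every raw frame instead of every N frames."""
    return reconstruction_rate_hz(1.0, 1) / reconstruction_rate_hz(
        1.0, frames_per_reconstruction
    )


camera_fps = 100.0  # hypothetical camera frame rate, assumed for illustration
for n_frames in (10, 20):
    conventional = reconstruction_rate_hz(camera_fps, n_frames)
    per_frame = reconstruction_rate_hz(camera_fps, 1)
    print(
        f"{n_frames} raw images per reconstruction: "
        f"{conventional:.0f} Hz conventionally, {per_frame:.0f} Hz per frame, "
        f"gain {temporal_gain(n_frames):.0f}x"
    )
```

The gain equals the number of raw images the conventional reconstruction consumed, which is why the improvement depends on how many images were used initially.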

NSTM uses machine learning but requires no pre-training or data priors. This simplifies setup and avoids biases that training data could introduce. The only data the model uses are the measurements captured from the sample being imaged.
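The idea of recovering both the scene and its motion from the measurements alone can be illustrated with a toy example. This is not NSTM: the sketch below swaps the neural networks for a simple search over a constant velocity, and the 1D scene, noise level, and function names are all assumptions made for illustration.

```python
import numpy as np


def simulate_frames(scene, velocity, n_frames, noise_std, rng):
    """Sequential noisy measurements of a scene drifting `velocity` pixels per frame."""
    return np.stack([
        np.roll(scene, velocity * t) + noise_std * rng.standard_normal(scene.size)
        for t in range(n_frames)
    ])


def reconstruct(frames, velocity):
    """Undo the assumed motion, average the frames, and report the data misfit."""
    aligned = np.stack([np.roll(frame, -velocity * t) for t, frame in enumerate(frames)])
    scene_hat = aligned.mean(axis=0)
    misfit = float(np.sum((aligned - scene_hat) ** 2))
    return scene_hat, misfit


def fit_velocity(frames, candidates):
    """Pick the velocity whose reconstruction best explains the measurements."""
    return min(candidates, key=lambda v: reconstruct(frames, v)[1])


rng = np.random.default_rng(0)
x = np.arange(64)
scene = np.exp(-0.5 * ((x - 20) / 2.0) ** 2)  # toy 1D "sample": a single bump
frames = simulate_frames(scene, velocity=3, n_frames=10, noise_std=0.05, rng=rng)

v_hat = fit_velocity(frames, candidates=range(-5, 6))
static_recon, _ = reconstruct(frames, velocity=0)      # assumes a static scene
motion_recon, _ = reconstruct(frames, velocity=v_hat)  # accounts for the motion

err_static = float(np.sqrt(np.mean((static_recon - scene) ** 2)))
err_motion = float(np.sqrt(np.mean((motion_recon - scene) ** 2)))
print(f"estimated velocity: {v_hat} px/frame")
print(f"RMS error, static assumption: {err_static:.3f}")
print(f"RMS error, motion-aware:      {err_motion:.3f}")
```

No training data is involved: the velocity is chosen purely by how well the resulting reconstruction agrees with the captured frames. The ground-truth scene is used only at the end, to score the two reconstructions.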

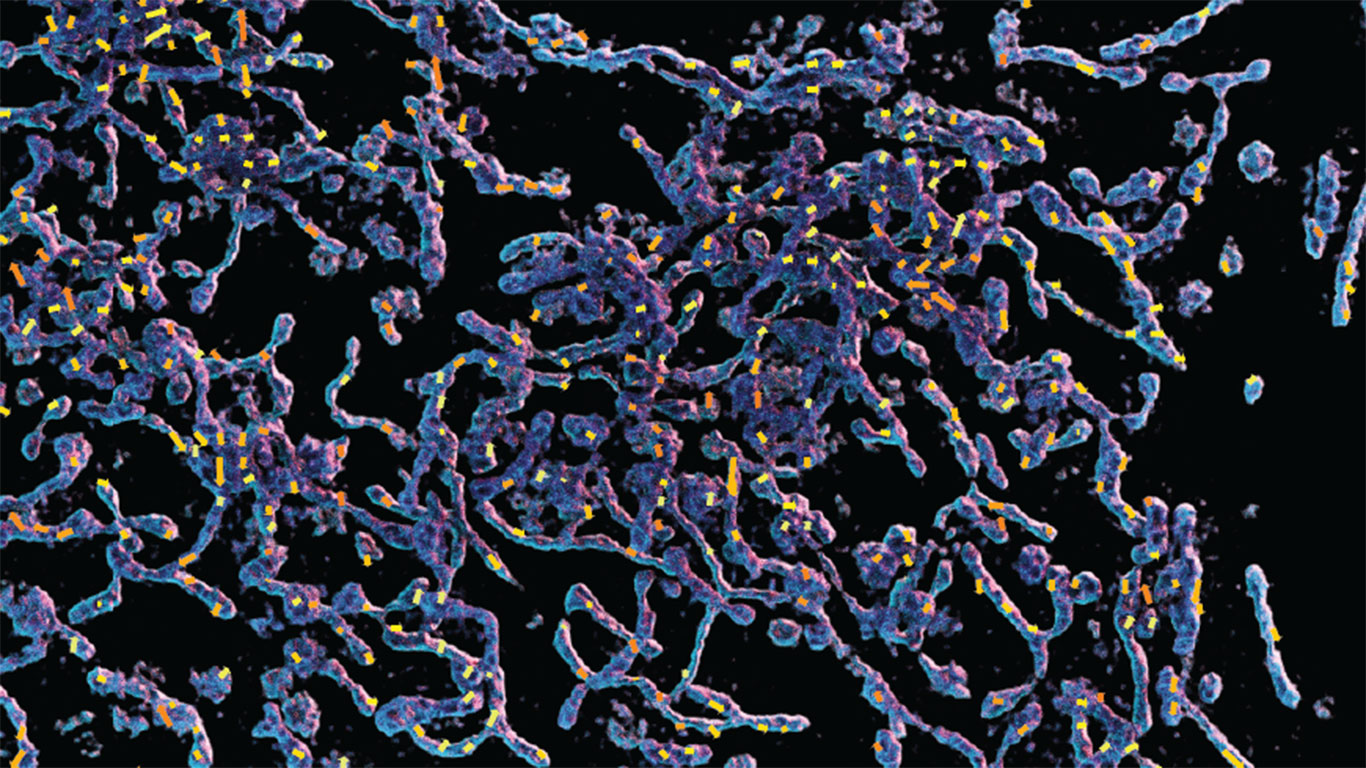

In the study, NSTM showed promising results in three different microscopy and photography applications: differential phase contrast microscopy, 3D structured illumination microscopy and rolling-shutter DiffuserCam.

But, according to Waller, “these are really just the tip of the iceberg.” NSTM could potentially be used to enhance any multi-shot computational imaging method, broadening its range of scientific applications, particularly in the biological sciences.

“It’s just a model, so you could apply it to any computational inverse problem with dynamic scenes. It could be used in tomography, like CT scans, MRI or other super-resolution methods,” she said. “Scanning microscope methods might also benefit from NSTM.”

The researchers envision NSTM someday being integrated into commercially available imaging systems, much like a software upgrade. In the meantime, Cao and others will work to further refine the tool.

“We’re just trying to push the limit of seeing those very fast dynamics,” he said.

Co-authors include postdoctoral researcher Nikita Divekar and assistant professors James Nuñez and Srigokul Upadhyayula, all from the Department of Molecular and Cell Biology.