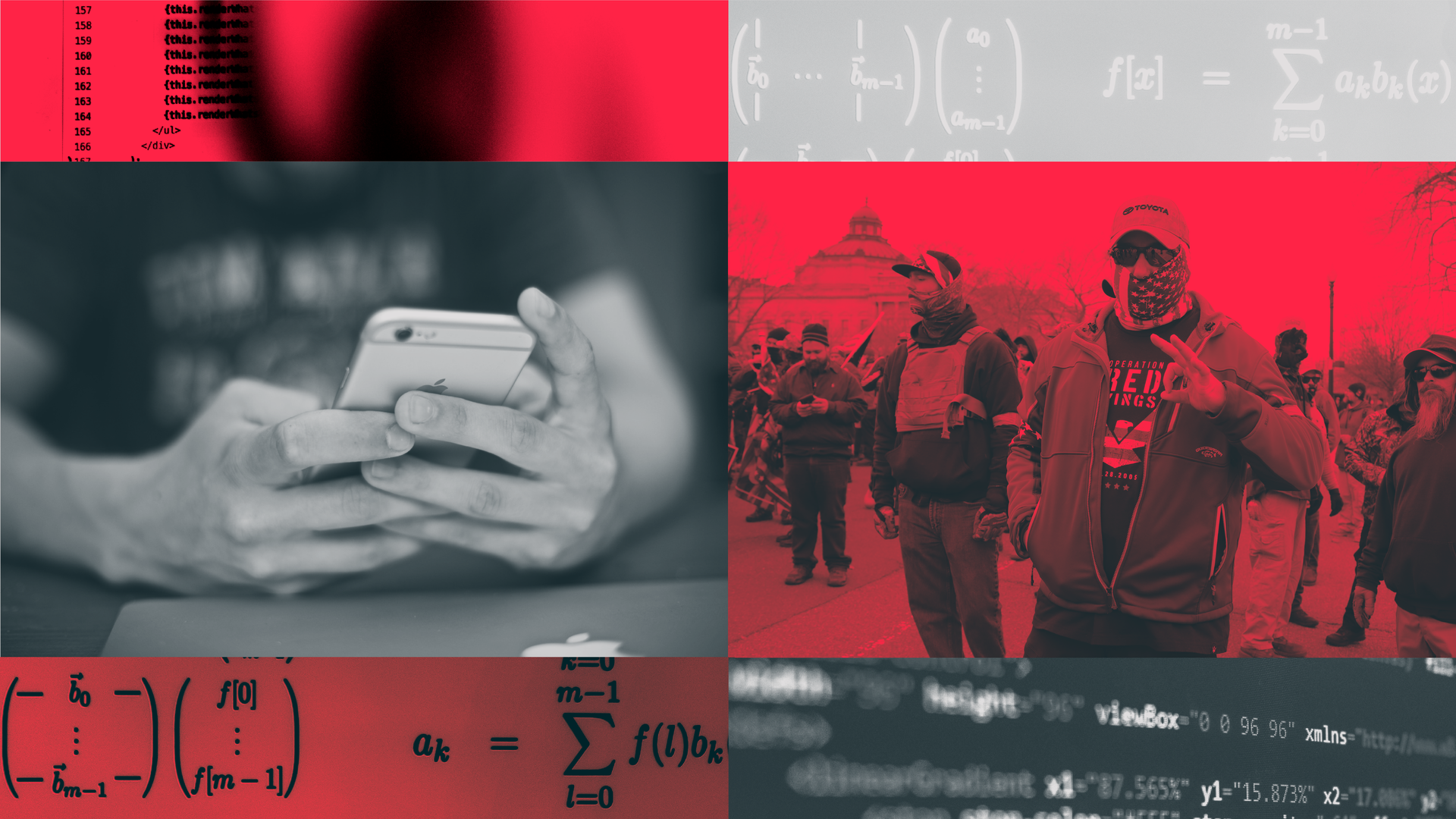

Disinformation Nation: What Can We Do To Crack the Code?

"Polarization, violence and social media are inextricably intertwined," says one scholar. But Berkeley researchers say regulation could be a powerful solution.

The 2024 presidential election is just a year away, but already there is an uneasy sense of déjà vu. While nominations are still being contested and outcomes are far from certain, it’s clear that fact-free allegations, conspiracy theories and more subtle manipulations will — again — shape and perhaps overwhelm the most important ritual in American democracy.

The light-speed advance of smart phones and social media since the turn of the century has put a powerful source of misinformation and disinformation in the pocket of virtually every American. But while millions of people in the U.S. and worldwide feel powerless against disinformation, a network of scholars at UC Berkeley is exploring its often covert dynamics and charting innovative ways to neutralize the toxic effects.

"Polarization, violence, and social media are inextricably intertwined," Jonathan Stray, senior scientist at the UC Berkeley Center for Human-Compatible AI, wrote bluntly in a recent article.

"We've got to change the online information ecosystem," says Hany Farid, an influential Berkeley researcher who probes the intersection of technology and society. "The vast majority of Americans are now getting their news from social media, and it’s even worse in other parts of the world. Young people 15 to 18 years old — when they want to know what’s happening in Ukraine, they’re not going to Google. They’re going to TikTok videos.

"This can't be good for the world."

Disinformation has always been with us — but why is it so potent today? At Berkeley, scholars across a range of disciplines are probing the corrosive effects of algorithms, machine learning and other exotic technologies operating below the surface of the information landscape.

The tools and tactics used by merchants of disinformation can be highly effective, both at shifting opinion and at generating profit. The impact has been evident in recent elections, in the long campaign against the COVID-19 virus, and now in wars in Ukraine and the Middle East.

In a series of interviews, the Berkeley researchers offered no easy solutions. Still, they said, it is possible to improve the information landscape.

Internet platforms could voluntarily tweak their systems to serve up less disinformation and make outrageous content less viral. Policymakers could provide the platforms with incentives to promote a healthier civic dialogue. Where incentives fail, they could employ tough regulation and the threat of costly legal liability. And, the scholars said, it’s essential for everyone to be educated to navigate a landscape of deliberate falsehoods.

Even such modest solutions would require enormous political will. But the need is increasingly urgent: With the accelerating advance of artificial intelligence, the scholars said, disinformation soon will be more convincing than ever.

The exotic technology that charts our innermost needs and desires

Our minds, individually and collectively, have become a battleground for the forces of modern information warfare, but most of us are barely aware of how these battles are fought.

So here’s the crucial first step: To change the information ecosystem, we have to understand it.

Compared to 20 years ago, Berkeley scholars say, there are more news media, and more of them are fiercely partisan. Some politicians, especially on the right, are distorting the truth and lying outright, with little consequence. Practically everyone has a smart phone, and those phones connect us to deep, interlaced networks of news, commentary and like-minded community. Social media weave those many streams into powerful rivers of unreliable content.

"We have to think about fringe platforms, like 4chan and 8chan, as the origin of some of these problems," said Brandie Nonnecke, director of the CITRIS Policy Lab and a professor at the Goldman School of Public Policy. They generate dubious information, "which makes its way through mainstream social media and up to larger platforms. But then CNN and Fox News start to replicate this stuff, and that’s where you have the big impact."

Underlying the visible information landscape is an infrastructure of exotic technology that tracks our personal details, our buying habits, our religious, cultural and political interests. The technology tracks when we comment or click ‘like’ on social media posts. It looks for patterns in our preferences.

If you’re into women’s basketball, your social media “recommender system” might send you more posts about the WNBA. If you’re into Argentinian wines, the platform might offer you more posts about wine in South America. If you’re suspicious of vaccines and looking for alternative COVID treatments, the recommender system might steer you to info on ivermectin or hydroxychloroquine, even if there’s no evidence that those drugs are effective.

A system 'to keep us clicking like a bunch of monkeys'

Here’s another key principle: In effect, recommender systems often are programmed to engage human psychology at a primal level.

We’re wired to focus on threats, on things that are shocking or outrageous. We seek confirmation for our pre-existing biases. Confronted with false information, we’ll accept it as true if it fits our biases, or if we simply hear it often enough.

Stray explained that recommender systems try to figure out what we want to see by predicting what we will respond to. This is called "optimizing for engagement." But it’s a double-edged sword, he said, because "we respond not just to what is good and valuable but what is outrageous and flattering to our biases."

If social media engineers want to elicit more clicks, likes and comments from readers, they can program their systems to recommend the most provocative, outrageous content, said Farid, a professor in the Berkeley School of Information and the Department of Electrical Engineering and Computer Sciences.

He added: "If they decide, 'Look, let’s optimize for user engagement because that will optimize profit,' the algorithms are like, 'All right, whatever it takes.'

"If it means delivering images of dead babies, that's what the system is going to do. It doesn't have morality. … They’re just saying, 'Maximize user engagement, period.' And the algorithms are like, 'No problem! We’re going to figure out what drives human beings.'"

That, Farid said, was the model Facebook used in an earlier era, when it was growing from 100 million users to 3 billion users in just a few years.

The algorithms that drove the recommender system "just wanted to deliver outrage," Farid said, "because that’s what kept us clicking like a bunch of monkeys."

In Stray's view, internet platforms don’t exploit us intentionally. Rather, he explained, "it's an unwanted side effect of trying to determine what is valuable to us. Where platforms are culpable is in paying insufficient attention to fixing these bad side effects."

Welcome to the rabbit hole

Research shows that in today’s political climate, far-right conflict sells. A 2021 study from Cybersecurity for Democracy found that hard-right accounts featuring a lot of disinformation generated user engagement at levels far above average.

"Platforms often incentivize conflict actors toward more divisive and potentially violence-inducing speech, while also facilitating mass harassment and manipulation," Stray and his co-authors wrote in an essay last month for the Knight First Amendment Institute at Columbia University. Other studies cited in their essay showed that when posts use emotional, moral language, or when they attack groups of perceived opponents, user engagement spikes.

Contained in those feedback loops are drivers of right-wing radicalization.

According to Farid, over 50% of Facebook users who join white supremacist groups were guided to those groups through content recommended by Facebook. Social media platforms are familiar with those dynamics, the Berkeley scholars say, but most of them choose to continue.

Farid described an experiment, carried out earlier this decade by Facebook, called "Good for the World, Bad for the World." In simple terms, the platform revised its algorithms to dial down conflict and outrage in favor of more positive themes.

"Then their news feed was nicer — less of the nastiness, less of the Nazis, less bad stuff," Farid recounted. "But you know what else happened? People spent less time at Facebook.

"So what did Facebook do?" he asked. "It's the most dystopian thing ever: They literally turned off the algorithm called Good for the World, and they turned on the algorithm Bad for the World. … They're not here to make the world a better place. They're here to maximize profits for their shareholders."

To solve disinformation, focus on the platforms

Facebook and Twitter (now rebranded as X) were wide-open conduits for disinformation — domestic and foreign — in the 2016 and 2020 U.S. presidential elections. They helped enable the Jan. 6, 2021, mob violence at the Capitol. Even at the height of the COVID pandemic, when thousands of Americans were dying every week, social media platforms carried volumes of disinformation on the supposed risk of government policies and vaccines that actually saved millions of lives.

Today, the new conflict in the Middle East has produced a global storm of disinformation, experts say.

Still, despite the unprecedented harm, there’s no quick fix. The U.S. Supreme Court, in various rulings, has held that constitutional protections for free speech allow a relatively broad right to lie.

It would be all but impossible to moderate content on all news and social media sites — the volume is too great. And of course, the truth is often ambiguous, and disinformation is often not obvious.

Still, scholars like Nonnecke, Stray and Farid see a range of possible solutions to mitigate the harms of disinformation. And some of the most powerful potential solutions focus on social media recommender systems.

Nonnecke points to policies that social media can use to discourage disinformation and to slow its viral spread. For example, she explained, when social media put warning notices on suspect posts, or advise readers to read a full post before sharing it on their networks, that creates a "friction" that slows the spread of disinformation.

In their recent paper, Stray and his co-authors concluded that social media companies could have a significant positive impact just by weaving positive human values into their recommender systems.

The platforms could dial down the algorithms that advance negative content to maximize user engagement, the authors wrote. They could change their formulae so that "conflict entrepreneurs" would not have easy access to global distribution. They could rewrite the algorithms to promote content that encourages mutual understanding among diverse communities, and to support peace building over conflict in war zones.

What if social media companies say no?

Farid, Nonnecke and Stray all have close ties with Silicon Valley, and they emphasize the need for constructive engagement.

"It needs to be ... a partnership with the platforms," Nonnecke said. "I think we go down a precarious path if we start to have the government telling platforms what content they can and cannot carry."

Facing intense public and political pressure, social media have made some significant voluntary efforts — but most analysts say they don’t go far enough. And now many platforms are backsliding.

Since right-friendly entrepreneur Elon Musk purchased Twitter and rebranded it as X, the platform has abandoned many of those efforts. And in a new report, the European Union finds that among all social media platforms, X had the highest ratio of Russian propaganda, hate speech and other sorts of misinformation and disinformation, followed by Facebook.

Which raises a crucial question: How can society thwart disinformation when the internet platforms refuse to cooperate?

"We need government oversight," Farid said. "The solution here — and this is where it's going to get hard — is thoughtful, sensible regulation that changes the calculation for the social media companies, so that they understand we can't have this free-for-all where anything goes.

"That's what the government is supposed to do: Take my tax dollars and keep me safe. Deliver clean water and clean air. Keep the roads safe and make sure nothing kills me."

Prying open a 'black box'

Those are divisive words in the U.S. political environment, but the scholars described a spectrum of regulation, some of which might be welcomed by the industry.

"Society should help platforms by taking some of the complex decision making out of their hands," Stray wrote. "Just as building designers have clear guidelines as to what safety standards are expected of them from society, so too could society provide clear guidance to (social media) companies as to what design patterns they need to follow."

One school of thought holds that consumers should shape their own recommender systems, dictating to social media their content preferences. That may sound workable initially, but Nonnecke sees risks.

"Will everybody want to eat their fruits and vegetables and have diverse content?" she asked. "Or are we just going to behave in the way that we always have, where we like to consume the content that aligns with our beliefs. … My worry is that if we give more control to users, people won't build it out in a way that is actually better for society."

Instead, Nonnecke points to regulations being adopted in Europe that seek a complex balance between freedom for businesses and protecting the well-being of people and democracy. Both she and Farid say the policies could be a good fit for the U.S.

Europe’s 2018 General Data Protection Regulation gives users far more control to block platforms from collecting their personal data. As a result, users in much of the world who check out a new webpage are offered the option, "Do Not Sell My Personal Information."

Initially, those privacy safeguards gave the platforms a rationale for keeping their algorithms secret. Now, new laws taking effect in the European Union will require the platforms to make their data available to verified researchers, but in a way that assures user privacy.

In effect, the laws will allow researchers and policymakers to crack open "a black box," Nonnecke said. "Let’s say a third-party researcher like me reports, 'Hey, I see that you're feeding up pro-anorexia content to young girls. I can see it — you're prioritizing this content to them.' ... The EU can then say to them, 'This is a child. You need to remove this content.'"

Incentives and threats: the power of public policy

Farid, too, sees the value of European policy innovation. The United Kingdom, Australia and other countries also are moving aggressively against the unchecked spread of disinformation.

But the U.S., he said, is "adrift at sea."

Farid believes that to check the harms of disinformation, U.S. policymakers must establish a forceful legal threat, and like others, he’s looking at Section 230 of the U.S. Communications Decency Act. The 1996 law currently protects platforms from liability for content produced by their users — such as teens who record themselves driving at top speeds — and this has become a flashpoint in the debate over how to reform online culture to protect democracy.

Farid’s position: If platforms promote content that causes harm, they should be legally responsible for the damages.

Stray and Nonnecke wouldn’t go that far. When a case challenging Section 230 came before the U.S. Supreme Court earlier this year, they joined an amicus curiae brief that urged the court to preserve Section 230 protections for recommender systems. Without that shield, the brief said, platforms might limit innovations — and even limit speech — out of fear of being sued.

The court declined to remove the protections provided under current law. That was seen as a win for Facebook, YouTube, X and others. But the issue is likely to come before the nation’s high court again, and efforts to strip social media of such protections may be gaining momentum in Congress and in state legislatures.

'These technologies will be with us forever'

In a society that values innovation and free expression, finding solutions that balance many interests is complex. And yet, the need is acute.

"These systems are going to exist in some form or another for the rest of humanity's existence," Stray said. "So we have to decide: What is it that we want from them?"

Meanwhile, the risks are being sharpened by the rapid advance of artificial intelligence, which has given everyone with a computer and some basic skills incredible propaganda powers: the ability to create increasingly deceptive texts and "deepfake" photos and videos.

"It’s a really important moment," Nonnecke said. "With AI, the scriptwriting is a lot better. Voice simulation is getting a lot better. And then images — deepfakes are getting a lot better.

"Those three things together? I am afraid."