Literally Switching Strategies to Handle the Internet Data Flood

On its biggest sales day this year, Amazon sold more than 100 million items. Meanwhile, every second, Google received well over 50,000 queries.

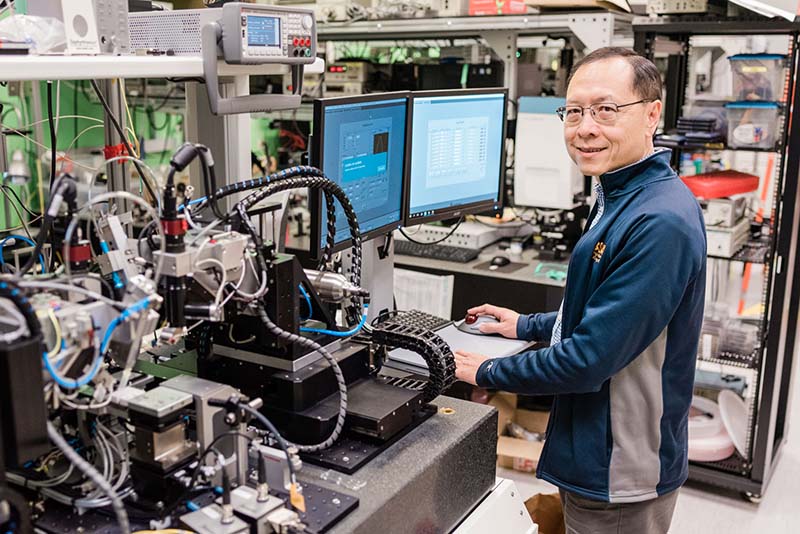

Photo: Mark Joseph Hanson

Cloud applications and the ever-increasing demand by large enterprises to transmit and analyze “big data” add to the flood, stretching the capacity of even the largest data center servers that collect, store, process and route data and provide timely responses to end users.

The demand on servers is growing exponentially, says Ming Wu, professor of electrical engineering and computer sciences. The volume threatens to overwhelm even “hyperscale” data center networks that use hundreds of thousands of servers to process and route traffic to the right destination.

Servers are interconnected by both optical fibers and electronic switches. Data races through the fiber optic cables to the switches, which convert the optical signals to electrical ones in order to route the traffic. The flow then gets converted back to optical signals. Typically, for every bit of data streaming into large data centers, about six times more data flows through to process the request.

The electronic switches become bottlenecks for large traffic flows, Wu says. The switches are good at directing relatively small packets of data, but they are reaching their limits in the largest data center server networks, where demand is highest.

Making them faster would require more efficient optical-to-electrical and electrical-to-optical conversions – an increasingly difficult challenge as Internet traffic continues to soar. Cooling the hard-working switches also sucks up a lot of power.

Wu has invented an alternative – an optical, or photonic, switch capable of record-breaking speed and low power consumption. The optical switches can be fabricated as integrated circuits, so they can be mass-produced, keeping the cost per device low.

His research team has begun collaborating with some of the largest data centers on applications of the switch. He expects that his support from the Bakar Fellows Program will allow him to develop a practical switch prototype and help identify a foundry to fabricate the switches on a large scale, speeding commercialization.

Wu didn’t design the new switches to replace electronic ones, but to pair with them, allowing the fiber network connecting the switches and servers to change on the fly, adapting to the traffic pattern.

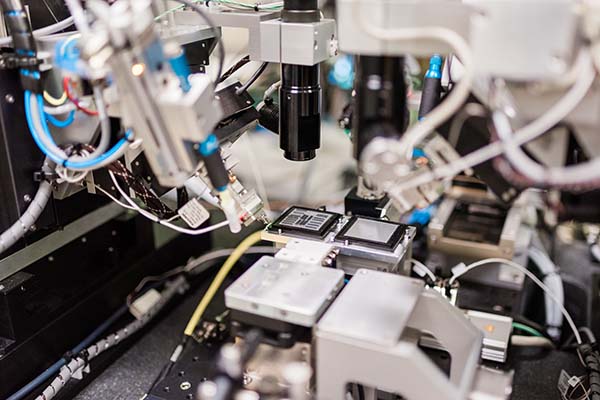

Photo: Mark Joseph Hanson

The key to his invention lies in reducing the loss of photons as they pass through the optical switches – a major hurdle up to now in efforts to develop optical switches.

In Wu’s optical switch, light waves travel down two closely arrayed paths so that light can switch from one path to another – like changing lanes on a freeway, he says. Few photons are lost.

His research group developed the new switch in Berkeley’s Marvell Nanofabrication Lab, which Wu directs. The team employed micro-electro-mechanical systems, or MEMS – similar to sensors used in cell phone gyroscopes and many other devices – to guide the optical waves.

“The MEMS physically open a connection between the two light paths, like a drawbridge,” he says.

Some of Wu’s research on the new optical switch was supported by a Google Faculty Research Award – an indication of the invention’s commercial potential. He has also received funding from the National Science Foundation and the Department of Energy.

His switch concept has been patented. Another patent, now being examined, improves switch efficiency and performance. Others are being filed.

Moving a hardware invention from the lab to commercial use is much harder and more expensive than developing and marketing new software. The gap between the lab and industry is often called the Valley of Death.

In addition to helping him refine the switch and seek a manufacturer, the Bakar support is helping Wu explore production strategies with supply chains to ensure the switches can make the leap from the lab to the real world.

“The Bakar support,” Wu says, “is helping us bridge the valley.”