$21.6 million funding from DARPA to build window into the brain

The Defense Advanced Research Projects Agency has awarded UC Berkeley $21.6 million over four years to create a window into the brain through which researchers — and eventually physicians — can monitor and activate thousands to millions of individual neurons using light.

The UC Berkeley researchers refer to the device as a cortical modem: a way of “reading” from and “writing” to the brain, like the input-output activity of internet modems.

“The ability to talk to the brain has the incredible potential to help compensate for neurological damage caused by degenerative diseases or injury,” said project leader Ehud Isacoff, a UC Berkeley professor of molecular and cell biology and director of the Helen Wills Neuroscience Institute. “By encoding perceptions into the human cortex, you could allow the blind to see or the paralyzed to feel touch.”

The researchers’ goal is to read from a million individual neurons and simultaneously stimulate 1,000 of them with single-cell accuracy. This would be a first step toward replacing a damaged eye with a device that talks directly to the visual part of the cerebral cortex, or toward relaying touch sensations from an artificial limb to the touch part of the cortex to help an amputee control the limb.

“It is very clear that in any prosthetic device feedback is essential,” Isacoff said. “If you can directly feed sensory information into the cortex – what the sensory system would have done if it was still there – then you would have the fine closed-loop input-output needed for complex control.”

The project is one of six funded this year by DARPA’s Neural Engineering System Design (NESD) program, which is part of the federal government’s Brain Initiative and aims to develop implantable, biocompatible “neural interfaces” that can compensate for visual or hearing deficits. The awards were announced this week.

Reading from and writing to the brain

While the researchers, who include neuroscientists, engineers and computer scientists, ultimately hope to build a device for use in humans, their goal during the four-year funding period is to create a prototype that can read from and write to the brains of model organisms in which neural activity and behavior can be monitored and controlled simultaneously. These include zebrafish larvae, which are transparent, and mice, whose brains can be imaged through a transparent window in the skull.

To communicate with the brain, the team will first insert into neurons a gene that makes a fluorescent protein, which flashes when the cell fires an action potential. A second gene will make a light-activated “optogenetic” protein, which stimulates the neuron in response to a pulse of light.
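Reading activity from such an indicator typically comes down to spotting brief rises in each cell's brightness. The sketch below is a minimal illustration, not the team's pipeline: it assumes a regularly sampled fluorescence trace per neuron, estimates the resting brightness F0 as a low percentile of the trace, and flags a "flash" wherever the normalized change (F - F0) / F0 crosses a fixed threshold. The function names, the percentile and the threshold are hypothetical choices.

```python
def delta_f_over_f(trace, baseline_percentile=0.2):
    """Normalize a raw fluorescence trace to (F - F0) / F0.

    F0, the resting brightness, is taken as a low percentile of the trace.
    This is an illustrative assumption; it must be positive for the division.
    """
    ordered = sorted(trace)
    f0 = ordered[int(baseline_percentile * (len(ordered) - 1))]
    return [(f - f0) / f0 for f in trace]


def detect_flashes(trace, threshold=0.5):
    """Return the sample indices where dF/F rises across the threshold."""
    dff = delta_f_over_f(trace)
    onsets = []
    above = False
    for i, value in enumerate(dff):
        if value >= threshold and not above:
            onsets.append(i)  # record only the upward crossing, not every high sample
        above = value >= threshold
    return onsets
```

For a trace such as `[100, 101, 99, 100, 180, 160, 120, 100, 99, 101, 170, 130, 100]`, `detect_flashes` reports onsets at samples 4 and 10. Counting only upward crossings keeps one long flash from being reported as several events.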

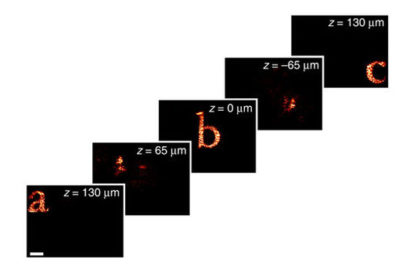

To read from and write to these neurons, the team needs a two-tiered device. The reading device it is developing is a miniaturized microscope that mounts on a small window in the skull and peers through the surface of the brain to visualize up to a million neurons at a time. This microscope is based on the revolutionary “light field camera,” which captures light through an array of lenses and then computationally reconstructs images that can be brought into focus at any depth after the picture is taken. The “light field microscope” will do the same to visualize vast numbers of neurons at different depths and monitor their activity.
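The idea of refocusing after capture can be illustrated with the textbook shift-and-add method on a one-dimensional light field. This is a simplified sketch, not the compressed light field reconstruction the team describes: it assumes each lens in the array yields one small "view" of the scene, that views differ only by integer pixel shifts, and `refocus` is a hypothetical name. Choosing a different `shift` selects a different depth to bring into focus.

```python
def refocus(views, shift):
    """Shift-and-add refocusing of a 1D light field.

    views[u][x] is the brightness recorded at pixel x through lens u.
    A point at a given depth appears displaced in proportion to each lens's
    offset from the central one. Shifting every view back by shift * offset
    and averaging lines up points at the matching depth (sharp) while points
    at other depths stay spread out (blurred).
    """
    n_views = len(views)
    width = len(views[0])
    center = (n_views - 1) / 2
    image = [0.0] * width
    for u, view in enumerate(views):
        offset = round(shift * (u - center))
        for x in range(width):
            src = x + offset
            if 0 <= src < width:  # pixels shifted in from outside the sensor stay dark
                image[x] += view[src] / n_views
    return image
```

For example, a point source that appears one pixel further along in each successive view (pixels 8 to 12 across five views) refocuses to a single bright pixel with `refocus(views, 1)`, while `refocus(views, 0)` smears it over five dimmer pixels.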

For the writing component, they are developing a means to project light patterns onto these neurons using 3D holograms, stimulating groups of neurons in a way that reflects normal brain activity.

Finally, the team will develop computational methods that identify the brain activity patterns associated with different sensory experiences, hoping to learn the rules well enough to generate “synthetic percepts.”
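The article does not say which computational methods the team will use. As a hedged illustration of the general idea, a nearest-centroid decoder is one of the simplest ways to link activity patterns to sensory experiences: average the population response recorded for each stimulus, then label a new response by the closest average. The `stimulation_pattern` helper, which picks the most active neurons of a learned pattern as candidates for optogenetic stimulation, is purely hypothetical and only gestures at how a “synthetic percept” might be targeted.

```python
import math
from collections import defaultdict


def fit_centroids(recordings):
    """Average the activity vectors recorded for each stimulus.

    recordings: list of (stimulus_label, activity_vector) pairs, where each
    vector holds one activity value per monitored neuron.
    Returns {label: mean activity vector}.
    """
    sums = {}
    counts = defaultdict(int)
    for label, activity in recordings:
        if label not in sums:
            sums[label] = [0.0] * len(activity)
        sums[label] = [s + a for s, a in zip(sums[label], activity)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def decode(centroids, activity):
    """Label a new activity vector with the stimulus whose centroid is nearest."""
    return min(centroids, key=lambda label: math.dist(centroids[label], activity))


def stimulation_pattern(centroid, k):
    """Hypothetical: indices of the k most active neurons in a learned pattern."""
    return sorted(range(len(centroid)), key=lambda i: centroid[i], reverse=True)[:k]
```

Trained on a few labeled recordings, `decode` assigns a fresh response to the stimulus it most resembles. Real population decoders are usually far more elaborate, but the read-then-write logic is the same.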

Learning while building

Isacoff admits that the researchers have taken on a daunting task, since how sensory perceptions are encoded at this level of detail and on this scale is still poorly understood. He and his neuroscientist colleagues will be learning as they build the devices.

“The kind of devices we are going to make will be incredible experimental tools for understanding brain function,” he said. “And then, once we learn enough about the system and how it works, we will actually have devices that are useful in many ways.”

Isacoff, who specializes in using optogenetics to study the brain’s architecture, can already successfully read from thousands of neurons in the brain of a larval zebrafish and simultaneously write to a similar number, using a large microscope that peers through the transparent skin of an immobilized fish.

During the next four years, team members will miniaturize the microscope, taking advantage of compressed light field microscopy developed by Ren Ng to take images with a flat sheet of lenses that allows focusing at all depths through a material. Several years ago, Ng, now a UC Berkeley assistant professor of electrical engineering and computer sciences, invented the light field camera.

In order to write to hundreds of thousands of neurons over a small depth, the team plans to use a technique developed by another collaborator, Valentina Emiliani at the University of Paris, Descartes. Her spatial light modulator creates three-dimensional holograms that can light up large numbers of individual cells at multiple depths under the cortical surface.
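A spatial light modulator shapes light by delaying it by a chosen phase at each pixel, so the job is to find a phase pattern whose projected light lands only on the selected cells. A classic textbook way to compute such a pattern is the Gerchberg-Saxton algorithm. The sketch below is a one-dimensional toy, assuming a setup where the pattern at the target plane is the Fourier transform of the modulator field. It is not necessarily how Emiliani's group computes its holograms, and all function names are hypothetical.

```python
import cmath
import math
import random


def dft(field, inverse=False):
    """Naive O(N^2) discrete Fourier transform with unitary 1/sqrt(N) scaling."""
    n = len(field)
    sign = 1 if inverse else -1
    return [
        sum(field[m] * cmath.exp(sign * 2j * math.pi * k * m / n) for m in range(n)) / math.sqrt(n)
        for k in range(n)
    ]


def gerchberg_saxton(targets, n_pixels, iterations=50, seed=0):
    """Find modulator phases (radians) that steer light onto the target indices."""
    rng = random.Random(seed)
    target_amp = [1.0 if k in targets else 0.0 for k in range(n_pixels)]
    phases = [rng.uniform(0, 2 * math.pi) for _ in range(n_pixels)]
    for _ in range(iterations):
        slm_field = [cmath.exp(1j * p) for p in phases]  # phase-only: unit amplitude
        far_field = dft(slm_field)
        # Keep the computed phase at the target plane, impose the desired spot amplitudes.
        constrained = [a * cmath.exp(1j * cmath.phase(f)) for a, f in zip(target_amp, far_field)]
        back = dft(constrained, inverse=True)
        phases = [cmath.phase(b) for b in back]  # keep the phase, discard the amplitude
    return phases


def efficiency(phases, targets):
    """Fraction of the projected light that lands on the target spots."""
    far_field = dft([cmath.exp(1j * p) for p in phases])
    total = sum(abs(f) ** 2 for f in far_field)
    return sum(abs(far_field[k]) ** 2 for k in targets) / total
```

Calling `gerchberg_saxton({5, 20, 41}, 64)` and passing the result to `efficiency` shows how much light reaches three chosen spots. With a single target the algorithm settles on a simple phase ramp that sends essentially all of the light to that spot. A real modulator works in two dimensions and, as the article notes, targets cells at multiple depths, which adds a focusing term this toy omits.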

The compressed light field microscope and spatial light modulator will be shrunk to fit inside a cube one centimeter, or two-fifths of an inch, on a side.

“The idea is to miniaturize the input-output optical device so that it is small enough to be carried comfortably on the skull, with the ability to point light carefully enough so that it basically hits one nerve cell at a time, and allows us to rapidly change the pattern so that we can drive patterns of activity at the same rate that they normally occur,” Isacoff said.

Creating perceptions in the brain

One major limitation is that light penetrates only the first few hundred microns of the cortex, the brain’s outer layer, which is responsible for higher-order mental functions such as thinking and memory, as well as for interpreting input from our senses. This thin outer layer nevertheless contains cell layers that represent visual input and touch sensations. Team member Jack Gallant, a UC Berkeley professor of psychology, has shown that it’s possible to interpret what someone is seeing solely from measured neural activity in the visual cortex.

“We’re not just measuring from a combination of neurons of many, many different types singing different songs,” Isacoff said. “We plan to focus on one subset of neurons that perform a certain kind of function in a certain layer spread out over a large part of the cortex, because reading activity from a larger area of the brain allows us to capture a larger fraction of the visual or tactile field.”

The combined read-write function will eventually be used to directly encode perceptions into the human cortex, such as inputting the perception of a visual scene to enable a blind person to see.

In announcing the award, Phillip Alvelda, the founding manager for the Neural Engineering System Design program, noted that the goal of communicating with one million neurons sounds lofty.

“A million neurons represents a minuscule percentage of the 86 billion neurons in the human brain,” he said. “Its deeper complexities are going to remain a mystery for some time to come. But if we’re successful in delivering rich sensory signals directly to the brain, NESD will lay a broad foundation for new neurological therapies.”

The brain modem team includes 10 UC Berkeley faculty, along with researchers from Lawrence Berkeley National Laboratory, Argonne National Laboratory and the University of Paris, Descartes.
