Berkeley artificial intelligence (AI) expert Stuart Russell will lead a new Center for Human-Compatible Artificial Intelligence, launched this week.

Russell, a UC Berkeley professor of electrical engineering and computer sciences and the Smith-Zadeh Professor in Engineering, is co-author of Artificial Intelligence: A Modern Approach, considered the standard text in the field. He has long advocated incorporating human values into the design of AI.

The primary focus of the new center is to ensure that AI systems are beneficial to humans, he said.

The co-principal investigators for the new center include computer scientists Pieter Abbeel and Anca Dragan and cognitive scientist Tom Griffiths, all from UC Berkeley; computer scientists Bart Selman and Joseph Halpern, from Cornell University; and AI experts Michael Wellman and Satinder Singh Baveja, from the University of Michigan. Russell said the center expects to add collaborators with related expertise in economics, philosophy and other social sciences.

The center is being launched with a grant of $5.5 million from the Open Philanthropy Project, with additional grants for the center’s research from the Leverhulme Trust and the Future of Life Institute.

Russell is quick to dismiss the imaginary threat from the sentient, evil robots of science fiction. The issue, he said, is that machines as we currently design them in fields like AI, robotics, control theory and operations research take the objectives that we humans give them very literally. Told to clean the bath, a domestic robot might, like the Cat in the Hat, use mother’s white dress to wipe it, not understanding that the value of a clean dress is greater than the value of a clean bath.
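The bath example can be made concrete with a toy calculation. The sketch below is illustrative only: the tool names, the scores and both objective functions are hypothetical numbers chosen for this example, not anything from the center's research. It shows how an agent that maximizes only the stated objective picks the dress, while one whose objective also accounts for the value of the dress picks the sponge.

```python
# Hypothetical outcomes for each cleaning tool (made-up numbers).
tools = {
    "sponge": {"bath_cleanliness": 0.8, "damage_cost": 0.0},
    "mothers_white_dress": {"bath_cleanliness": 0.9, "damage_cost": 5.0},
}

def literal_objective(outcome):
    # Exactly what the robot was told: make the bath clean.
    return outcome["bath_cleanliness"]

def fuller_objective(outcome):
    # Assumed stand-in for what the human actually wants:
    # a clean bath, but not at the cost of a ruined dress.
    return outcome["bath_cleanliness"] - outcome["damage_cost"]

def choose(tools, objective):
    # Pick the tool whose outcome scores highest under the objective.
    return max(tools, key=lambda name: objective(tools[name]))

literal_choice = choose(tools, literal_objective)
informed_choice = choose(tools, fuller_objective)
print(literal_choice, informed_choice)
```

The difficulty Russell describes is that nobody hands the robot the second objective; it has to be learned.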

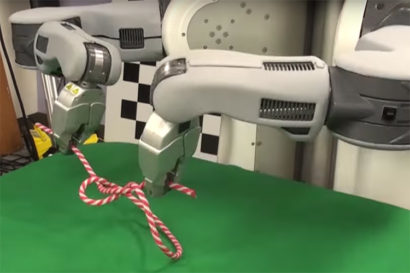

One approach Russell and others are exploring is called inverse reinforcement learning, through which a robot can learn about human values by observing human behavior. By watching people dragging themselves out of bed in the morning and going through the grinding, hissing and steaming motions of making a caffè latte, for example, the robot learns something about the value of coffee to humans at that time of day.
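As a rough illustration of the idea, the sketch below infers how much a person values each morning drink from a log of observed choices. It is a deliberately simplified, one-step stand-in for inverse reinforcement learning, not the method used by Russell's group: it assumes the person picks options with probability proportional to the exponential of their value (a softmax choice model), and the function names, the observation log and the learning settings are all hypothetical.

```python
import math

def softmax(rewards):
    # Convert rewards into choice probabilities (numerically stable).
    m = max(rewards)
    exps = [math.exp(r - m) for r in rewards]
    total = sum(exps)
    return [e / total for e in exps]

def infer_rewards(observed_choices, options, steps=2000, lr=0.1):
    # Fit rewards by gradient ascent on the mean log-likelihood
    # of the observed choices under the assumed softmax model.
    n = len(observed_choices)
    counts = [observed_choices.count(o) for o in options]
    rewards = [0.0] * len(options)
    for _ in range(steps):
        probs = softmax(rewards)
        # Gradient for each option: observed frequency minus predicted probability.
        rewards = [r + lr * (c / n - p)
                   for r, c, p in zip(rewards, counts, probs)]
    # Rewards are only identifiable up to a constant, so center them at zero.
    mean = sum(rewards) / len(rewards)
    return {o: r - mean for o, r in zip(options, rewards)}

# Hypothetical log of what one person did on ten mornings.
options = ["coffee", "tea", "nothing"]
observed = ["coffee"] * 8 + ["tea"] * 1 + ["nothing"] * 1

rewards = infer_rewards(observed, options)
best = max(rewards, key=rewards.get)
print(best, rewards)
```

Full inverse reinforcement learning works with sequences of actions and their consequences rather than single choices, but the principle is the same: behavior is treated as evidence about underlying values.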

“Rather than have robot designers specify the values, which would probably be a disaster,” said Russell, “instead the robots will observe and learn from people. Not just by watching, but also by reading. Almost everything ever written down is about people doing things, and other people having opinions about it. All of that is useful evidence.”

Russell and his colleagues don’t expect this to be an easy task.

“People are highly varied in their values and far from perfect in putting them into practice,” he acknowledged. “These aspects cause problems for a robot trying to learn what it is that we want and to navigate the often conflicting desires of different individuals.”