With These Devices, the Doctor Is Always In

Berkeley engineer Rikky Muller explains how implantable and wearable technologies are redefining patient care

UC Berkeley’s Rikky Muller (Ph.D.’13 EECS), associate professor of electrical engineering and computer sciences, can still recall her first glimpse into the world of neurotechnology. At a conference nearly two decades ago, she saw something that didn’t seem possible: chips recording neural signals from the brain, then using those signals to control robotic arms.

“I think my head just exploded. I couldn’t even believe it,” she said. “Very quickly, I realized its potential to treat neurological disease — and the impact it could have on patients.”

Today, she continues to embrace that passion through her work at the Muller Lab, a research group focused on developing low-power, wireless microelectronic and integrated systems for neurological applications.

It’s work she’s eager to share — and recently, she spoke with Berkeley Engineering about her newest projects and their potential to transform the way we manage many common health conditions.

What major challenges do you face designing these types of devices?

My group develops translational medical devices to monitor, diagnose and treat neurological disorders. We build complete end-to-end systems that interact with the brain and peripheral nervous system, combining sensors, integrated circuits, wireless technology and machine learning. As a hardware group, we’re tasked with making devices that are minimally invasive, ultra-low power and safe to implant in or wear on the body.

One challenge that we’re focusing on right now is making devices that are more intelligent and individualized. There’s a huge degree of variability between people, both in terms of the signals that we record and in terms of the responses to therapy — such as neurostimulation or drug delivery — so we really want to close the loop. We want to build devices that can make continuous observations, that extract biomarkers of aberrant states and that can autonomously determine the best therapy for a patient. Hopefully, that’s going to lead to better outcomes, faster timeframes and lower costs.

Are there unique challenges to designing a device for the human body?

The human body is a harsh environment for electronics. First, it’s mostly water, which doesn’t mix well with electronics. Second, it’s always trying to reject foreign objects. Third, it’s sensitive to increases in temperature, which can result in tissue death. And fourth, it’s prone to infection.

So we need to make the electronics extremely small to reduce the foreign body response, and wireless to reduce infection risk. We also have to make them out of biocompatible materials, make sure that they’re flexible and compliant so that they don’t cause tissue damage, and make sure they dissipate minuscule amounts of power so that they don’t chronically heat the tissue.

At the same time, we need to put a lot of functionality — and now intelligence — onto these devices, which is a major technical challenge.

Most recently, you used holograms to communicate with the brain. What insights about the brain do you hope to gain from this new neurotechnology?

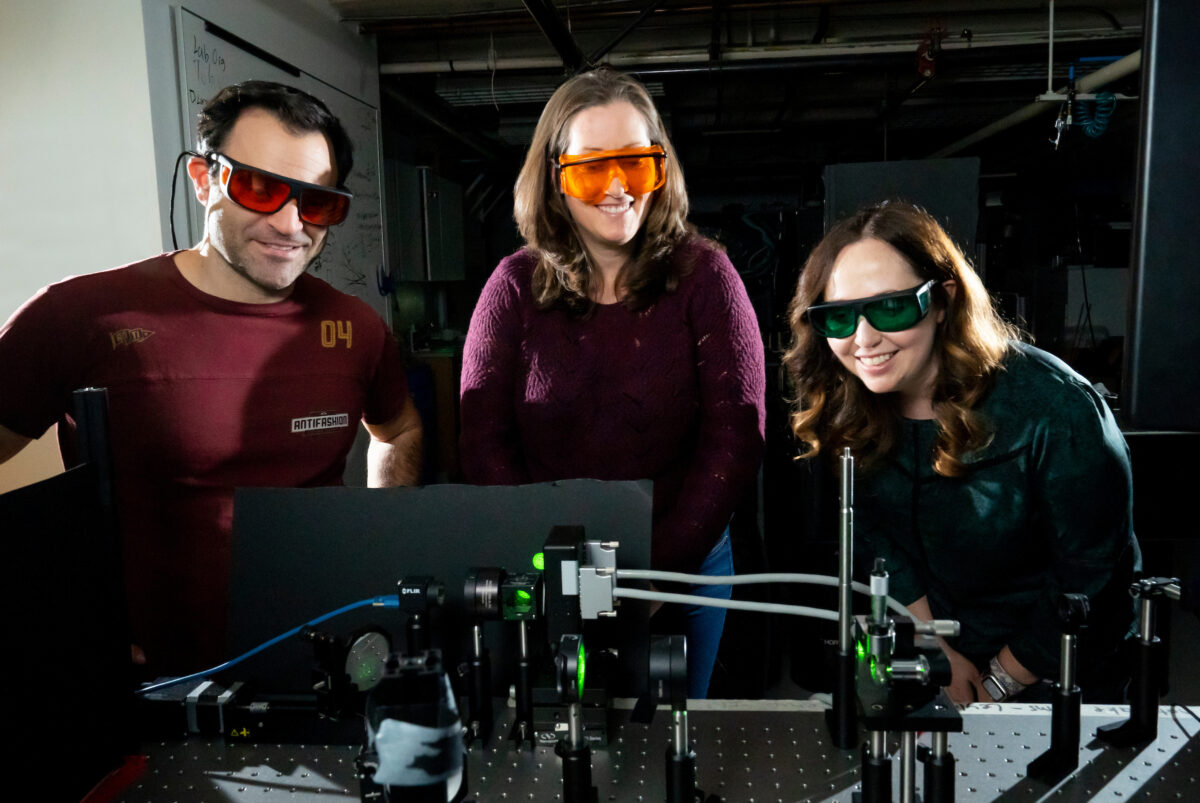

This project was done together with [EECS professor] Laura Waller and [associate professor of molecular and cell biology and of neuroscience] Hillel Adesnik. Note that we’re still very much developing this technology.

In just one cubic millimeter of the cerebral cortex, there are about 50,000 neurons. No tool available today allows us to communicate bidirectionally with all 50,000 of them, at their natural timescales of communication, without making total Swiss cheese of the brain.

We’re now aiming to do that with light. The idea is that we can genetically modify neurons to emit light signals through fluorescence and to respond to light signals, the latter a technique known as optogenetics. We’re developing an instrument that generates points of light in 3D patterns, also known as point-cloud holograms. That allows us to shine light at specific places and times to communicate with specific neurons without disturbing the neural tissue. We can also switch through these patterns quickly, enabling communication with a very large number of neurons in a short period of time.

Because we can switch very, very quickly, we can essentially talk to these neurons and receive signals from them at the natural speeds at which they communicate. So you can think of this as a high-speed optical I/O to the brain.
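A rough way to see why fast switching matters: if each hologram can hold only a limited number of light points, the target neurons have to be split across successive patterns, and the time to address every neuron once is the number of patterns divided by the switching rate. The Python sketch below illustrates only that arithmetic. The helpers `plan_patterns` and `cycle_time_s`, the points-per-pattern limit and the switching rate are hypothetical placeholders, not specifications of the instrument described above.

```python
import math
from typing import List


def plan_patterns(neuron_ids: List[int], points_per_pattern: int) -> List[List[int]]:
    """Split target neurons into successive point-cloud patterns.

    Hypothetical helper: assumes each pattern can hold at most
    `points_per_pattern` light foci, one per targeted neuron.
    """
    if points_per_pattern <= 0:
        raise ValueError("points_per_pattern must be positive")
    return [
        neuron_ids[i:i + points_per_pattern]
        for i in range(0, len(neuron_ids), points_per_pattern)
    ]


def cycle_time_s(num_neurons: int, points_per_pattern: int, switch_rate_hz: float) -> float:
    """Seconds needed to visit every target neuron once by cycling through patterns."""
    num_patterns = math.ceil(num_neurons / points_per_pattern)
    return num_patterns / switch_rate_hz
```

With purely illustrative values of 50,000 neurons, 500 points per pattern and a 10 kHz switching rate, `cycle_time_s(50_000, 500, 10_000)` works out to 100 patterns and returns 0.01, a full sweep every 10 ms. Doubling the switching rate or the points per pattern halves that time, which is why switching speed matters for keeping up with neural timescales.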

There’s no instrument today that can do that for tens of thousands or hundreds of thousands of neurons, and that’s what we’re aiming to do. Being able to see how neural circuits form, evolve and function, and how disease progresses, could profoundly change our understanding of basic neuroscience.

You also worked on an earbud that prevents drowsy drivers from falling asleep. Where did the idea for this device come from? How does it work?

We know that drowsy driving alone causes tens of thousands of accidents, and even deaths, each year. And drowsiness as an occupational hazard extends well beyond driving.

The idea for this device was inspired by the Apple AirPods. In 2017, I got my first pair, and my immediate thought was: What an incredible platform! We have to put electrodes on them and see what we can record. Could we actually record EEG from the ears?

Other groups were already working on this, including one pioneering group that was recording EEG from the ear canal. But that group was creating rigid, custom-molded devices, and we wanted to develop a comfortable, user-generic device, like AirPods, that anyone could easily wear in their ears.

So we developed earbuds made of flexible electronics. This was in collaboration with [EECS professor] Ana Arias. The design was based on a large database of human ear measurements. Flexible materials were needed so that the buds would have a comfortable fit while making good electrical contact with the ear canal. We also developed low-power wireless sensing electronics to interface with the earbuds. We benchmarked them against wet-scalp clinical EEG, the kind that’s used for monitoring seizures and sleep disorders.

From the types of signals we were able to record, we could tell that features of drowsiness and sleep would be robust. We could detect eyes opening and closing, neural oscillations linked to relaxation, and more. We then partnered with the Ford Motor Company to conduct a study on drowsiness. Ultimately, we were able to train a machine-learning model to accurately detect drowsiness in a cohort of about 10 users wearing our device. Interestingly, the signals are so well stereotyped across users that we didn’t even have to train on an individual’s data. So it could be kind of an out-of-the-box detector.
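One common way to test whether a detector works without an individual’s own data is leave-one-subject-out evaluation: the model is trained on every user except one, tested on the held-out user, and the process is repeated once per user. The sketch below shows only the data split, in Python. The study’s actual evaluation protocol is not described here, so treat this as an assumed illustration; `leave_one_subject_out` is a hypothetical helper name.

```python
from typing import Iterator, List, Sequence, Tuple


def leave_one_subject_out(
    subject_ids: Sequence[str],
) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held_out_subject, train_indices, test_indices) for each subject.

    subject_ids[i] names the user who produced recording window i.
    """
    for held_out in sorted(set(subject_ids)):
        # Training indices exclude every window from the held-out user...
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        # ...so the test windows come from a user the model has never seen.
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


# Example: five recording windows from three users.
for subject, train_idx, test_idx in leave_one_subject_out(["u1", "u1", "u2", "u3", "u2"]):
    print(subject, train_idx, test_idx)
```

For the five example windows, the first fold holds out `u1`, training on windows `[2, 3, 4]` and testing on `[0, 1]`. If accuracy stays high on every held-out user, the model is behaving like the user-generic detector described above.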

Earlier this year [2025], you received ARPA-H funding for research into a bioelectronic device to help address obesity and diabetes. How might this device improve the way patients manage these health conditions?

A major theme in my lab is closing the loop around therapeutic devices, incorporating sensing to make sure that the therapy is doing what it’s supposed to be doing. We’re applying that idea to drug delivery.

Many of today’s GLP-1 [diabetes and weight-loss] drugs require a once-a-week injection. The drug level in your body then fluctuates throughout the week until your next injection. Our aim is to use an implantable device to deliver a sustained, steady dose over a long period of time.

Just as you can genetically engineer cells to synthesize biologic drugs like insulin and GLP-1s, you can also modify those cells to produce fluorescent proteins. Our implantable device will use fluorescence sensing to measure the delivered drug dose, enabling closed-loop control to ensure a consistent dosage.
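Closing the loop here amounts to a feedback controller: the fluorescence reading gives an estimate of the delivered dose, and the release rate is nudged up or down to hold that estimate at a target. The Python sketch below uses a proportional-integral (PI) controller and a one-compartment clearance model purely as assumptions. The linear fluorescence calibration, the gains, the clearance rate and all function names are hypothetical and are not taken from the ARPA-H project.

```python
from dataclasses import dataclass


def estimate_dose(fluorescence: float, background: float, gain: float) -> float:
    """Convert a fluorescence reading to a dose estimate.

    Assumes a hypothetical linear calibration: F = background + gain * dose.
    """
    return (fluorescence - background) / gain


@dataclass
class PIController:
    kp: float              # proportional gain (hypothetical)
    ki: float              # integral gain (hypothetical)
    integral: float = 0.0  # accumulated error over time

    def update(self, target: float, measured: float, dt: float) -> float:
        """Return a non-negative release rate that drives `measured` toward `target`."""
        error = target - measured
        candidate = self.integral + error * dt
        output = self.kp * error + self.ki * candidate
        if output < 0.0:
            # The device can only add drug, so clamp at zero and skip
            # integration while saturated (simple anti-windup).
            return 0.0
        self.integral = candidate
        return output


def simulate(steps: int = 2000, dt: float = 0.1) -> float:
    """Run the loop against a toy first-order clearance model; return the final level."""
    background, gain = 0.2, 3.0  # hypothetical sensor calibration
    clearance = 0.1              # hypothetical fraction of drug cleared per unit time
    target = 1.0                 # hypothetical target level
    controller = PIController(kp=0.5, ki=0.2)
    level = 0.0
    for _ in range(steps):
        # Noise-free fluorescence reading of the current drug level.
        reading = background + gain * level
        measured = estimate_dose(reading, background, gain)
        release = controller.update(target, measured, dt)
        # Euler step: release adds drug, clearance removes a fraction of it.
        level += (release - clearance * level) * dt
    return level


print(round(simulate(), 3))
```

A PI loop is only one plausible choice. It is used here because the integral term removes the steady-state error that a purely proportional controller would leave when the body is constantly clearing the drug.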

What role do you think AI will play in advancing implantable medical devices and wearable technologies?

In terms of neurotechnology, for a long time we have needed to train patient-specific decoders to interpret neural data. That’s because, when you’re recording signals, there is wide variation from person to person: every brain is different, electrode locations are different, that kind of thing.

Our ability to now put AI on a single chip will bring intelligence directly onto the device itself, rather than having to stream data out to a computer and run inference in the cloud. That’s going to push the field forward, since [individual] devices will be able to make autonomous decisions in real time, in situ.

In my lab, we think of it as having a tiny doctor in the device. I really believe that’s the future.