Step by Step: Berkeley Robots Learn to Walk on Their Own in Record Time

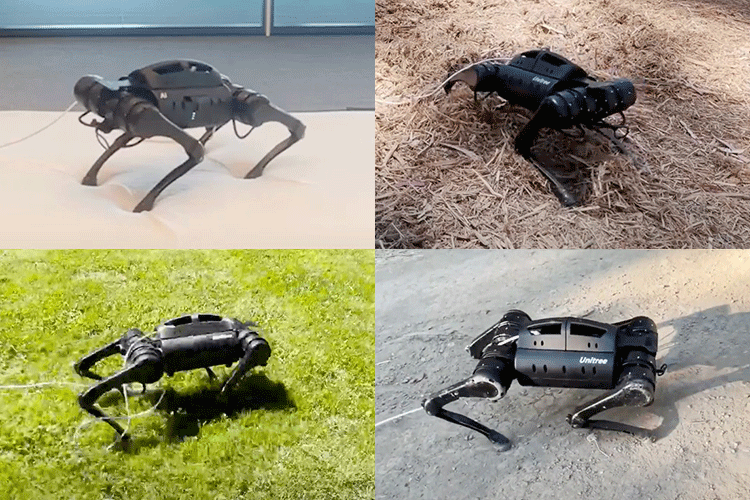

Berkeley researchers may be one step closer to making robot dogs our new best friends. Drawing on advances in machine learning, two separate teams have developed approaches that shorten in-the-field training times for quadruped robots, getting them to walk, and even roll over, in record time.

In a first for the robotics field, a team led by Sergey Levine, associate professor of electrical engineering and computer sciences, demonstrated a robot learning to walk in just 20 minutes without any prior training on models or simulations. The demonstration marks a significant advance: the robot relied solely on trial and error in the field to master the movements needed to walk and to adapt to different settings.

“Our work shows that training robots in the real world is more feasible than previously thought, and we hope, as a result, to empower other researchers to start tackling more real-world problems,” said Laura Smith, a Ph.D. student in Levine’s lab and one of the lead authors of the paper posted on arXiv.

In past studies, robots of comparable complexity required anywhere from several hours to several weeks of training data to learn to walk using reinforcement learning (RL). They were also often trained in controlled lab settings, where they learned to walk on relatively simple terrain and received precise feedback about their performance.

Levine’s team accelerated learning by leveraging advances in RL algorithms and machine learning frameworks. Their approach enables the robot to learn more efficiently from its mistakes while interacting with its environment.

To demonstrate the approach, Levine, Smith and Ilya Kostrikov, a co-author of the paper and postdoctoral scholar in Levine’s lab, placed the robot in unstructured outdoor environments. Starting from a standing position, the robot takes its first steps and, after a few wobbles, learns to walk consistently, as a video of the robot on local fire trails shows.

According to Smith, the robot explores by moving its legs and observing how its actions affect its movement. “Using feedback about whether it is moving forward, the robot quickly learns how to coordinate the movements of its limbs into a naturalistic walking gait,” she said. “While making progress up the hill, the robot can learn from its experience and hone its newfound skill.”
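Smith’s description boils down to a loop: try a leg movement, measure forward progress, and keep what works. The toy Python sketch that follows illustrates only that idea and is not the team’s algorithm. The one-parameter “gait” (`gait_offset`), the `toy_stride` physics, and the `forward_reward` function are hypothetical, and the update rule is random-search hill climbing, a deliberately simpler stand-in for the reinforcement learning the researchers actually used.

```python
import random


def forward_reward(x_before: float, x_after: float, dt: float) -> float:
    """Hypothetical reward: forward velocity from two position readings."""
    return (x_after - x_before) / dt


def toy_stride(gait_offset: float) -> float:
    """Toy stand-in for the robot (hypothetical physics): distance covered by
    one stride as a function of a single leg-coordination parameter in [0, 1].
    Progress is best when the offset is 0.5 and zero at either extreme."""
    return max(0.0, 1.0 - 4.0 * (gait_offset - 0.5) ** 2)


def learn_by_trial_and_error(trials: int = 200, seed: int = 0) -> float:
    """Random-search hill climbing: perturb the gait, keep it if it helps."""
    rng = random.Random(seed)
    best = rng.random()  # arbitrary initial coordination of the legs
    best_reward = forward_reward(0.0, toy_stride(best), dt=1.0)
    for _ in range(trials):
        # Explore: nudge the current gait and clamp it to the valid range.
        candidate = min(1.0, max(0.0, best + rng.gauss(0.0, 0.1)))
        reward = forward_reward(0.0, toy_stride(candidate), dt=1.0)
        if reward > best_reward:  # feedback: did it move forward more?
            best, best_reward = candidate, reward
    return best


if __name__ == "__main__":
    print(f"learned gait offset: {learn_by_trial_and_error():.2f}")
```

Running the script prints a gait offset close to 0.5, the setting that this made-up physics rewards most. A real quadruped has many joints and a far noisier reward, which is why the researchers relied on RL rather than a simple search like this one.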

Another Berkeley team, led by Pieter Abbeel, professor of electrical engineering and computer sciences, took a different approach to helping a four-legged robot teach itself to walk. In a video of the experiment, the robot starts on its back and then learns to roll over, stand up and walk in just one hour of real-world training time.

The robot also proved it could adapt. Within 10 minutes, it learned to withstand pushes or quickly roll over and get back on its feet.

Abbeel and his researchers employed an RL algorithm named Dreamer that uses a learned world model. This model is built from data gathered during the robot’s ongoing interactions with the world. The researchers then trained the robot within this world model, with the robot using it to imagine potential outcomes, a process they call “training in imagination.”

In a paper posted on arXiv, Abbeel and his team looked at how the Dreamer algorithm and world model could enable faster learning on physical robots in the real world, without simulators or demonstrations. Philipp Wu, Alejandro Escontrela and Danijar Hafner were co-lead authors for this paper, and Ken Goldberg, professor of industrial engineering and operations research and of electrical engineering and computer sciences, was a contributing author.

A robot can use this model of the world to predict the outcome of an action and to decide what action to take based on its observations. The model keeps improving and becomes more nuanced as the robot explores the real world.

“The robot sort of dreams and imagines what the consequences of its actions would be and then trains itself in imagination to improve, thinking of different actions and exploring a different sequence of events,” said Escontrela.
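The idea of training in imagination can be illustrated with a much simpler model than the one Abbeel’s team used. The Python sketch that follows fits a tiny world model to a handful of real trials, evaluates candidate actions entirely inside that model, tries the most promising one on the “real” robot, and refits. It is a toy built on assumptions: the one-dimensional `toy_robot_progress` physics, the quadratic model, and the grid search over actions are all hypothetical. Dreamer itself instead learns a neural network world model and trains its behavior on trajectories imagined within it.

```python
import random


def toy_robot_progress(action: float, rng: random.Random) -> float:
    """Hypothetical 'real robot': forward progress for an action in [-1, 1].
    Unknown to the learner; overly large actions slip, plus a little sensor noise."""
    return action - action ** 2 + rng.gauss(0.0, 0.01)


def fit_world_model(actions, progress):
    """Least-squares fit of progress ~ w1*a + w2*a**2 (solves the 2x2 normal equations)."""
    s11 = sum(a ** 2 for a in actions)
    s12 = sum(a ** 3 for a in actions)
    s22 = sum(a ** 4 for a in actions)
    b1 = sum(a * p for a, p in zip(actions, progress))
    b2 = sum(a ** 2 * p for a, p in zip(actions, progress))
    det = s11 * s22 - s12 * s12
    return ((b1 * s22 - s12 * b2) / det, (s11 * b2 - s12 * b1) / det)


def imagine_rollout(model, action: float, horizon: int = 10) -> float:
    """Predict total progress from repeating an action, using only the model."""
    w1, w2 = model
    position = 0.0
    for _ in range(horizon):
        position += w1 * action + w2 * action ** 2  # imagined step, no robot involved
    return position


def train_in_imagination(rounds: int = 5, seed: int = 0):
    rng = random.Random(seed)
    # A few random real-world trials to get the model started.
    actions = [rng.uniform(-1.0, 1.0) for _ in range(5)]
    progress = [toy_robot_progress(a, rng) for a in actions]
    candidates = [i / 20 for i in range(-20, 21)]  # actions -1.0, -0.95, ..., 1.0
    model = fit_world_model(actions, progress)
    for _ in range(rounds):
        # Pick the action whose imagined outcome looks best...
        best = max(candidates, key=lambda a: imagine_rollout(model, a))
        # ...try it for real, and refine the model with the new experience.
        actions.append(best)
        progress.append(toy_robot_progress(best, rng))
        model = fit_world_model(actions, progress)
    best = max(candidates, key=lambda a: imagine_rollout(model, a))
    return best, model


if __name__ == "__main__":
    action, (w1, w2) = train_in_imagination()
    print(f"chosen action {action:.2f}, model w1={w1:.2f}, w2={w2:.2f}")
```

Most of the “thinking” happens in `imagine_rollout`, which never touches the robot; real trials are spent only on the action the model currently favors, and each one sharpens the model for the next round.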

In addition, the dog robot must figure out how to walk without any resets from the researchers. Even if the robot takes a bad fall in the process of exploring or is pushed down, and recovery seems impossible, it still must try to recover on its own without any intervention. “It’s up to the robot to learn,” said Escontrela.

These latest studies by both teams demonstrate how quickly RL algorithms are evolving, with UC Berkeley research teams at the forefront. Last year, Jitendra Malik, professor of electrical engineering and computer sciences and a research scientist at the Facebook AI Research group, introduced an RL strategy called rapid motor adaptation (RMA). With RMA, robots learn behavior in a simulator and then rely on that learned behavior to generalize to real-world situations.

RMA was a dramatic improvement over existing learning systems, and the RL approaches used by Levine’s and Abbeel’s teams take another step forward by enabling the robots to learn from their own real-world experience.

“Any machine-learning model will inevitably fail to generalize in some conditions, normally when they differ significantly from its training conditions,” said Smith. “We are studying how to allow the robot to learn from its mistakes and continue to improve while it is acting in the real world.”

Not relying on simulators also offers practical benefits, such as greater efficiency. “The main takeaway is that you can do reinforcement learning directly on real robots in a reasonable timeframe,” said Abbeel.

Both research teams plan to continue teaching robots increasingly complex tasks. Someday, we might see quadruped robots accompanying search-and-rescue teams or assisting with microsurgery.

“It’s worth noting how amazing all these different learning methods are,” said Escontrela. “Ten to 20 years ago, people were manually designing controllers to specify leg movement. But now the robot can learn this behavior purely from data and a simple reward signal — and continue learning into the future. In 10 years, we’ll say that all this work was baby steps, literally.”