Particle Physics Turns to Quantum Computing for Solutions to Tomorrow’s Big-Data Problems

Giant-scale physics experiments rely increasingly on big data and complex algorithms fed into powerful computers, and managing this growing mass of data presents challenges of its own.

To prepare for the data deluge expected from next-generation upgrades and new experiments, physicists are turning to the fledgling field of quantum computing to find faster ways to analyze the incoming data.

In a conventional computer, memory takes the form of a large collection of bits, and each bit can hold only one of two values: a one or a zero, akin to an on or off position. In a quantum computer, meanwhile, data is stored in quantum bits, or qubits. A qubit can represent a one, a zero, or a superposition: a combined state in which it is, loosely speaking, both a one and a zero at the same time.
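To make the contrast with an ordinary bit concrete, the following is a minimal sketch of a single qubit described as a pair of amplitudes, one for zero and one for one. The function names `superposition` and `measurement_probabilities` are illustrative choices, not part of any quantum-computing library, and the sketch only models the arithmetic on a conventional computer.

```python
import math


def superposition(alpha, beta):
    """Return the normalized state alpha|0> + beta|1> as a pair of amplitudes.

    alpha and beta may be real or complex; they are rescaled so that the
    squared magnitudes of the two amplitudes sum to 1.
    """
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    if norm == 0:
        raise ValueError("at least one amplitude must be non-zero")
    return (complex(alpha) / norm, complex(beta) / norm)


def measurement_probabilities(state):
    """Return (P(read 0), P(read 1)): the squared magnitude of each amplitude."""
    amp0, amp1 = state
    return (abs(amp0) ** 2, abs(amp1) ** 2)


# A classical-like state: always reads out as zero.
definite_zero = superposition(1, 0)

# An equal blend of zero and one: each outcome is read out about half the time.
equal_blend = superposition(1, 1)
p0, p1 = measurement_probabilities(equal_blend)
print(f"P(0) = {p0:.3f}, P(1) = {p1:.3f}")
```

Reading out the qubit always yields a plain zero or one; the amplitudes only set how likely each outcome is.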

By tapping into this and other quantum properties, quantum computers hold the potential to handle larger datasets and quickly work through some problems that would trip up even the world’s fastest supercomputers. For other types of problems, though, conventional computers will continue to outperform quantum machines.

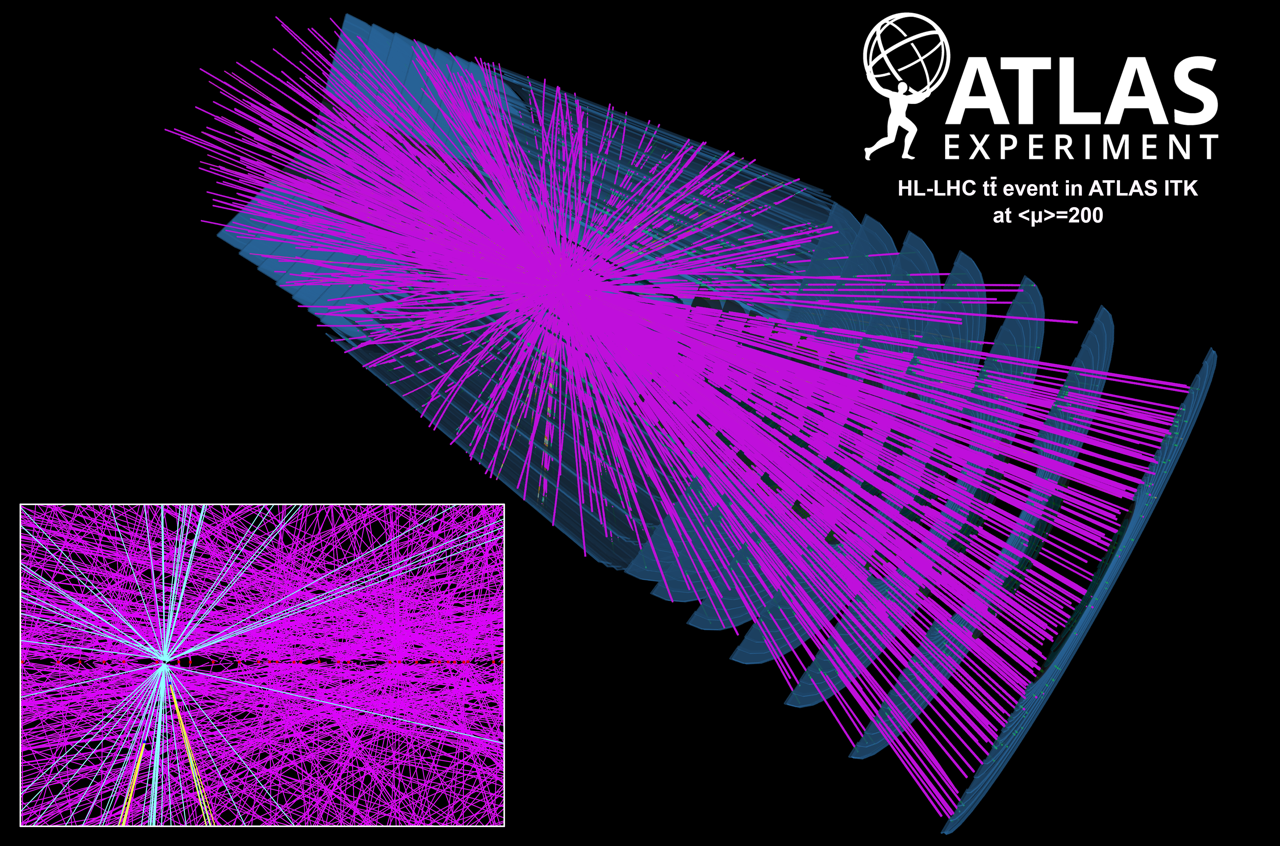

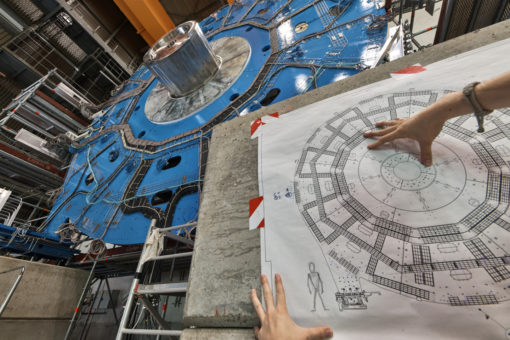

The High Luminosity Large Hadron Collider (HL-LHC) Project, a planned upgrade of the world’s largest particle accelerator at the CERN laboratory in Europe, will come on line in 2026. It will produce billions of particle events per second – five to seven times more data than its current maximum rate – and CERN is seeking new approaches to rapidly and accurately analyze this data.

In these particle events, positively charged subatomic particles called protons collide, producing sprays of other particles, including quarks and gluons, from the energy of the collision. The interactions of particles can also cause other particles – like the Higgs boson – to pop into existence.

Tracking the creation and precise paths (called “tracks”) of these particles as they travel through layers of a particle detector – while excluding the unwanted mess, or “noise” produced in these events – is key in analyzing the collision data.

The data will be like a giant 3D connect-the-dots puzzle that contains many separate fragments, with little guidance on how to connect the dots.

To address this next-gen problem, a group of student researchers and other scientists at the U.S. Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab) has been exploring a wide range of new solutions.

One such approach is to develop and test a variety of algorithms tailored to different types of quantum-computing systems. Their aim: Explore whether these technologies and techniques hold promise for reconstructing these particle tracks better and faster than conventional computers can.

Particle detectors work by detecting energy that is deposited in different layers of the detector materials. In the analysis of detector data, researchers work to reconstruct the trajectory of specific particles traveling through the detector array. Computer algorithms can aid this process through pattern recognition, and particles’ properties can be detailed by connecting the dots of individual “hits” collected by the detector and correctly identifying individual particle trajectories.
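As a rough illustration of what "connecting the dots" means, here is a toy, purely classical sketch that groups detector hits into track candidates. It is not the HEP.QPR group's method. It assumes a simplified two-dimensional detector with no magnetic field, so that every particle travels in a straight line from the collision point at the origin and all of its hits share the same azimuthal angle. The `Hit` type, the `find_tracks` function, and the tolerance values are hypothetical.

```python
import math
from collections import namedtuple

# A hit is a position (x, y) recorded on a numbered detector layer.
Hit = namedtuple("Hit", ["layer", "x", "y"])


def find_tracks(hits, phi_tolerance=0.02, min_layers=3):
    """Group hits into straight-line track candidates that point to the origin.

    Hits are sorted by azimuthal angle; a hit joins the current group if its
    angle lies within phi_tolerance (radians) of the group's first hit.
    Groups that span fewer than min_layers distinct layers are treated as
    noise. Wrap-around at +/- pi is ignored to keep the sketch short.
    """
    ordered = sorted(hits, key=lambda h: math.atan2(h.y, h.x))
    groups = []
    for hit in ordered:
        phi = math.atan2(hit.y, hit.x)
        if groups and abs(phi - groups[-1]["phi"]) <= phi_tolerance:
            groups[-1]["hits"].append(hit)
        else:
            groups.append({"phi": phi, "hits": [hit]})

    tracks = []
    for group in groups:
        layers = {h.layer for h in group["hits"]}
        if len(layers) >= min_layers:
            tracks.append(sorted(group["hits"], key=lambda h: h.layer))
    return tracks


# Two simulated particles crossing three layers, plus one stray noise hit.
hits = [
    Hit(layer, r * math.cos(phi), r * math.sin(phi))
    for phi in (0.3, 1.2)
    for layer, r in enumerate((1.0, 2.0, 3.0))
]
hits.append(Hit(1, 2.0 * math.cos(2.5), 2.0 * math.sin(2.5)))

tracks = find_tracks(hits)
print(f"{len(hits)} hits grouped into {len(tracks)} track candidates")
```

In a real detector the tracks curve in a magnetic field, thousands of particles overlap in each event, and many hits are noise, which is why the number of possible ways to connect the hits grows so quickly.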

Heather Gray, an experimental particle physicist at Berkeley Lab and a UC Berkeley physics professor, leads the Berkeley Lab-based R&D effort – Quantum Pattern Recognition for High-Energy Physics (HEP.QPR) – that seeks to identify quantum technologies to rapidly perform this pattern-recognition process in very-high-volume collision data. This R&D effort is funded as part of the DOE’s QuantISED (Quantum Information Science Enabled Discovery for High Energy Physics) portfolio.

The HEP.QPR project is also part of a broader initiative to boost quantum information science research at Berkeley Lab and across U.S. national laboratories.

Other members of the HEP.QPR group include Wahid Bhimji, Paolo Calafiura, and Wim Lavrijsen. Berkeley Lab postdoctoral researcher Illya Shapoval, who helped to establish the HEP.QPR project and explored quantum algorithms for associative memory as a member of the group, has since joined a Fundamental Algorithmic Research for Quantum Computing project. Bhimji is a big data architect at Berkeley Lab’s National Energy Research Scientific Computing Center (NERSC). Calafiura is chief software architect of CERN’s ATLAS experiment and a member of Berkeley Lab’s Computational Research Division (CRD). Lavrijsen is a CRD software engineer who is also involved in CERN’s ATLAS experiment.

Members of the HEP.QPR project have collaborated with researchers at the University of Tokyo and in Canada on the development of quantum algorithms in high-energy physics, and jointly organized a Quantum Computing Mini-Workshop at Berkeley Lab in October 2019.

Gray and Calafiura were also involved in a CERN-sponsored competition, launched in mid-2018, that challenged computer scientists to develop machine-learning-based techniques to accurately reconstruct particle tracks using a simulated set of HL-LHC data known as TrackML. Machine learning is a form of artificial intelligence in which algorithms can become more efficient and accurate through a gradual training process akin to human learning. Berkeley Lab’s quantum-computing effort in particle-track reconstruction also utilizes this TrackML set of simulated data.

Berkeley Lab and UC Berkeley are playing important roles in the rapidly evolving field of quantum computing through their participation in several quantum-focused efforts, including The Quantum Information Edge, a research alliance announced in December 2019.

The Quantum Information Edge is a nationwide alliance of national labs, universities, and industry advancing the frontiers of quantum computing systems to address scientific challenges and maintain U.S. leadership in next-generation information technology. It is led by the DOE’s Berkeley Lab and Sandia National Laboratories.