HYPPO: Leveraging Prediction Uncertainty to Optimize Deep Learning Models for Science

In the computing world, optimization comes in all shapes and sizes, from data collection and processing to analysis, management, and storage. And calls for optimization tools applicable to a growing range of science and technology R&D efforts are emerging all the time.

New solutions can be found in deep learning modeling, which has attracted the attention of the scientific research community for applications ranging from studying climate change and cosmological evolution to tracking particle physics collisions and deciphering traffic patterns. This trend has prompted a parallel need for optimization tools that can enhance deep learning models and training to improve their predictive capabilities and accelerate time-consuming computer simulations.

Leveraging support from the Laboratory Directed Research and Development (LDRD) program at Lawrence Berkeley National Laboratory (Berkeley Lab), a team of researchers in the Computing Sciences Area has developed a new software tool for conducting hyperparameter optimization (HPO) of deep neural networks while taking into account the prediction uncertainty that arises from using stochastic optimizers for training the models. Hyperparameters define the architecture of a deep neural network and how it is trained; they include the number of layers, nodes per layer, batch size, and learning rate, among others. Dubbed “HYPPO,” this open-source package is designed to optimize the architectures of deep learning models specifically for scientific applications.

“With HPO, the key thing is uncertainty quantification,” said Vincent Dumont, a postdoctoral researcher in the Center for Computational Sciences and Engineering at Berkeley Lab and lead author on a paper introducing HYPPO presented at the 2021 IEEE/ACM Workshop on Machine Learning in High Performance Computing Environments (MLHPC) in November. “We are looking into the accuracy of the models we are evaluating, especially for scientific applications. You want to make sure that the next time someone wants to reproduce the results that come out of a model, it is stable.”

“In machine learning, one of the questions that comes up is you have to decide what deep learning model you want to use and what is the architecture,” added Juliane Mueller, a staff scientist in the Applied Mathematics and Computational Research Division at Berkeley Lab and a co-author on the MLHPC paper. “There is this inherent uncertainty in deep learning model predictions and outcomes that simply results from using stochastic solvers under the hood. If you don’t take that into account when trying to solve your problem, you might come up with a model that happens to work well but no one can reproduce how well it performs.”
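The variability Mueller describes can be illustrated with a small sketch. It assumes one simple way of estimating the uncertainty: train the same architecture several times with different random seeds and report the mean and spread of the resulting loss. The function `train_and_evaluate`, its toy loss formula, and the hyperparameter names are hypothetical stand-ins for a real training run, not part of HYPPO.

```python
import random
import statistics


def train_and_evaluate(hyperparams, seed):
    """Hypothetical stand-in for training a network; returns a validation loss.

    The seed-dependent Gaussian noise mimics the run-to-run variation caused
    by stochastic optimizers and random weight initialization.
    """
    rng = random.Random(seed)
    base = 0.05 * (hyperparams["layers"] - 4) ** 2
    base += 10.0 * abs(hyperparams["learning_rate"] - 0.01)
    return base + rng.gauss(0.0, 0.05)


def evaluate_with_uncertainty(hyperparams, n_trials=5):
    """Repeat training with different seeds; return mean and standard deviation."""
    losses = [train_and_evaluate(hyperparams, seed) for seed in range(n_trials)]
    return statistics.mean(losses), statistics.stdev(losses)


mean_loss, std_loss = evaluate_with_uncertainty({"layers": 3, "learning_rate": 0.02})
print(f"loss = {mean_loss:.3f} +/- {std_loss:.3f}")
```

Comparing architectures on the mean together with the spread, rather than on a single run, makes it less likely that a model is chosen because of one lucky seed.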

Adaptive Surrogate Models

“When evaluating the performance of a deep learning architecture (defined by the hyperparameters), you first need to train it,” Mueller said. “This can take sometimes minutes, sometimes hours, sometimes even longer depending on how much data you have and how big your deep learning model is. So we wanted to reduce the number of architectures being tried.”

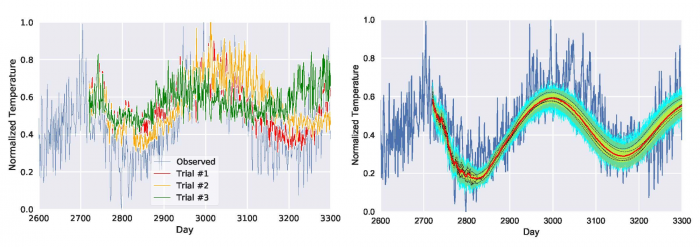

To this end, HYPPO uses adaptive surrogate models. A surrogate model is an approximation that represents a high-fidelity model well enough for the task at hand, but at a much lower computational cost. In HYPPO, the surrogate approximates the deep learning model’s performance as a function of its hyperparameters, so that an outcome of interest can be computed cheaply. In this way, the neural net’s prediction variability can be accounted for and reliable deep learning models can be found. “The adaptive surrogate models in HYPPO are basically what drives our optimization algorithm,” Mueller said. “They allow us to make performance predictions for untried hyperparameters given the data that has been observed so far, and these performance predictions guide the iterative hyperparameter selection.”

Each time a new architecture is evaluated, “you update the surrogate model and it becomes more accurate because it is adapting to what it is seeing in the hyperparameter space,” she added. “With HYPPO, we don’t have to select only a single architecture to evaluate in each iteration of the optimization, we can select multiple new architectures.”
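The loop described above (fit a surrogate to the evaluations so far, use it to pick new hyperparameters, evaluate them, update) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not HYPPO's algorithm: the article does not say which surrogate HYPPO uses, so a simple inverse-distance-weighted interpolant is swapped in, and `expensive_loss`, the single hyperparameter in [0, 1], and the exploration weight of 0.1 are all hypothetical.

```python
import random


def expensive_loss(x):
    """Hypothetical stand-in for training a network with hyperparameter x in [0, 1]."""
    return (x - 0.3) ** 2


def surrogate_predict(x, samples):
    """Inverse-distance-weighted prediction from the (x, loss) pairs seen so far."""
    num = den = 0.0
    for xi, yi in samples:
        d = abs(x - xi)
        if d < 1e-12:
            return yi  # exactly at an evaluated point
        w = 1.0 / d ** 2
        num += w * yi
        den += w
    return num / den


def acquisition(x, samples):
    """Lower is better: predicted loss minus a bonus for unexplored regions."""
    min_dist = min(abs(x - xi) for xi, _ in samples)
    return surrogate_predict(x, samples) - 0.1 * min_dist


def optimize(n_init=4, n_iter=10, n_candidates=200, batch=2, seed=0):
    rng = random.Random(seed)
    # Initial design: a few randomly chosen hyperparameter values
    samples = [(x, expensive_loss(x)) for x in (rng.random() for _ in range(n_init))]
    for _ in range(n_iter):
        candidates = [rng.random() for _ in range(n_candidates)]
        # Select several promising candidates per iteration, not just one
        chosen = sorted(candidates, key=lambda c: acquisition(c, samples))[:batch]
        # The expensive evaluations update the data the surrogate is built from
        samples.extend((c, expensive_loss(c)) for c in chosen)
    return min(samples, key=lambda s: s[1])


best_x, best_loss = optimize()
print(f"best hyperparameter = {best_x:.3f}, loss = {best_loss:.5f}")
```

Only the `expensive_loss` calls would correspond to real training runs; the many candidate evaluations go through the cheap surrogate, which is where the savings come from.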

Working hand in hand with surrogate modeling is asynchronous nested parallelism (ANP), a component of HYPPO on which Dumont has focused. One challenge in carrying out HPO for machine learning applications is the computational cost of training models for multiple sets of hyperparameters at the same time. By incorporating ANP into the software stack, HYPPO evaluates multiple architectures asynchronously and in parallel, which significantly reduces the computational burden of training complex architectures and quantifying their uncertainty.
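The idea of nesting can be sketched on a single machine with Python's standard `concurrent.futures` module. This is an illustration only, assuming two levels: an outer level that evaluates several architectures at once, and an inner level that runs the repeated training trials of each architecture in parallel. The article does not describe how HYPPO distributes work on a supercomputer, and `train_once` with its toy loss is a hypothetical placeholder for a real training run.

```python
import random
import statistics
from concurrent.futures import ThreadPoolExecutor, as_completed


def train_once(layers, seed):
    """Hypothetical stand-in for one training run; returns a noisy loss."""
    rng = random.Random(seed * 1000 + layers)
    return 0.05 * (layers - 4) ** 2 + rng.gauss(0.0, 0.05)


def evaluate_architecture(layers, n_trials=4):
    """Inner level: run the repeated trials of one architecture in parallel."""
    with ThreadPoolExecutor(max_workers=n_trials) as inner:
        losses = list(inner.map(lambda s: train_once(layers, s), range(n_trials)))
    return layers, statistics.mean(losses), statistics.stdev(losses)


def evaluate_all(architectures):
    """Outer level: evaluate architectures in parallel, handling each as it finishes."""
    results = {}
    with ThreadPoolExecutor(max_workers=len(architectures)) as outer:
        futures = [outer.submit(evaluate_architecture, a) for a in architectures]
        # as_completed yields in completion order, not submission order,
        # so a slow architecture does not hold up the others
        for future in as_completed(futures):
            layers, mean, std = future.result()
            results[layers] = (mean, std)
    return results


results = evaluate_all([2, 4, 6])
for layers in sorted(results):
    mean, std = results[layers]
    print(f"{layers} layers: loss = {mean:.3f} +/- {std:.3f}")
```

Threads are used here only to keep the sketch self-contained; real training workloads would typically be spread across processes, GPUs, or compute nodes.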

Wide-ranging Scientific Applications

These features make HYPPO a great fit for a variety of scientific applications. For example, in the MLHPC paper, the research team describes how HPO was used to enhance computed tomography (CT) image reconstruction. CT is a three-dimensional imaging technique that measures a series of two-dimensional projections – known as sinograms – of an object. Using HPO, the researchers were able to optimize a deep neural network architecture for sinogram inpainting (inpainting is a method commonly used to alter or enhance digital images). With HYPPO running on the Cori supercomputer at NERSC, a trained neural network filled in the missing angles of a sparsely sampled sinogram, after which the completed sinogram could be reconstructed using any standard algorithm.

Another application in the works involves using HYPPO to train a generative adversarial network (GAN) model that would replace a parameterized hadronization model used in high-energy physics (HEP) detector simulations. The goal is to obtain better approximations and thus increase the computational efficiency of the simulation.

“Because we have this highly flexible nested and complex way of doing parallelization, it is something you can use to make the HPO much faster,” Dumont said. “Our software is a first step toward providing much needed reliable and robust models.”

HYPPO is implemented in the Python programming language and can be used with both TensorFlow and PyTorch libraries. More information about the software – including detailed documentation that is being updated “all the time,” according to Dumont – can be found at https://hpo-uq.gitlab.io/.

“If people are curious, they should try it out,” Mueller said.

In addition to Dumont and Mueller, authors on the MLHPC paper include Berkeley Lab’s Mariam Kiran and Talita Perciano; former summer interns Casey Garner, Anuradha Trivedi, and Chelsea Jones; former summer visiting faculty Vidya Ganapati; and former Berkeley Lab staff scientist Marc Day.

HYPPO is a result of a Berkeley Lab LDRD effort, “Uncertainty quantification for hyperparameter tuning,” on which Mueller is the principal investigator and Kiran is the co-investigator.